Engineers just want to enjoy their weekend. But outages, hardware failures, and usage spikes can all lead to the dreaded PagerDuty alert, especially when the system being affected is something as critical as your metadata database.

Team morale aside, incidents affecting critical systems like your user metadata store cost time and money, and they distract your team from focusing on what actually matters – building and improving the features that drive your business.

Whether you’re considering a migration due to issues with your existing metadata store or you’re architecting a new application from the ground up, it pays to spend some time thinking about exactly what is required to build a user metadata store that will work at enterprise scale without ruining everybody’s weekend.

What is user metadata?

User metadata goes by many names, but what we’re talking about here is the data that’s associated with a specific user’s account that’s stored in a metadata store. Typically, this would include things such as user preferences, usage history, access level and privileges, and sometimes even logins and passwords, although this is also sometimes stored in a separate database dedicated to identity verification and access management (IAM).

Consider, for example, the data a massive ecommerce site might store about its users. Broadly, we might break this data down into three types, to be stored in three separate systems:

- IAM data such as usernames and password hashes.

- User metadata such as address, contact information, wish lists, payment methods, browsing history, etc.

- *Transactional data including payments, returns, shipments, etc.

These are not hard-and-fast divisions; in some cases IAM and user metadata are stored together. At some companies, transactional may be considered a type of user metadata, or some of the user metadata such as browsing history may be considered transactional.

In general, though, when we talk about building a user metadata store, we’re talking about building a system that holds critical data related to each user; data that the application relies on every time the user logs in to ensure a consistent and personalized experience.

Factors to consider for an enterprise user metadata store

Whether you’re planning to build a bespoke system from the ground up or evaluating different DBMS to serve as the backbone of your user metadata store, if you’re operating at enterprise scale – or aspire to be – there are a number of important factors to consider.

Always-on availability

First and most important: the system has to be available. Because the user metadata store enables significant parts of the overall user experience in almost any application, if it goes offline, the application experience is (at best) going to be severely degraded. Building a metadata store that can’t guarantee high availability is a recipe for producing those dreaded PagerDuty alerts and the user churn and project delays that come along with them.

At enterprise scale, high availability means:

- Embracing distributed architecture. Even the best hardware is still susceptible to natural disasters. In many cloud regions, availability zones are geographically close enough that the same event can impactan impact multiple AZs, so in practice this often means embracing a multi-region or even multi-cloud solution for your user metadata store.

- Minimizing scheduled downtime. Legacy database systems often must be taken offline to perform operations such as software upgrades or schema changes. This downtime is predictable and thus typically less costly than unscheduled downtime, but it still has costs. And your team probably isn’t going to be any more excited by the idea of a 3:00 AM DBMS update shift than they are about the weekend PagerDuty alarm.

Performance at (elastic) scale

Availability alone isn’t enough; the system also has to remain performant at scale. And in many industries, the ability to scale elastically up and down is required to maintain a high level of performance while keeping costs under control.

To return to our e-commerce example, when Black Friday rolls around, your metadata store will likely have to handle loads at least 2x to 3x higher than what it sees on an average day. But that extra infrastructure also means extra cost.

Ideally, you want to build or buy a system that can smoothly scale up and down to meet demand on “spike” days like Black Friday while minimizing costs on the less busy days.

Automation is a key consideration here as well. Particularly in industries where the traffic spikes are less predictable, it’s important to have a system that either auto-scales itself or at least that can be controlled programmatically so that you can write automations to scale it up and down, rather than having to log in and manually add and remove nodes every time traffic goes up or down.

Data domiciling and localization

Building a multi-region system in which data can be tied to specific locations has obvious advantages in terms of both availability and performance. A database that is spread across multiple regions can survive a regional outage without downtime or data loss (provided the replication is configured properly), and location copies of user metadata in the regions closest to the users who access it also improves performance by reducing latency.

However, in many cases, those advantages are beside the point. At enterprise scale, a multi-region solution is often required to maintain compliance with ever-changing global data sovereignty laws. User metadata stores often contain PII and other types of user-specific data that may be subject to national or local regulations, particularly in more heavily-regulated industries such as finance, real-money gaming, banking, healthcare, government services, etc.

It is critical that you consider data localization as a part of your metadata store if you operate in any regions that have, or are likely to enact, laws governing how and where user data can be stored.

Consistency guarantees aka ACID

Strong consistency guarantees are critical for transactional workloads, but they matter for user metadata, too.

Again, let’s return to our ecommerce example. Imagine we’ve built our user metadata store using a three-node system with eventual consistency. Now imagine a user who is browsing the site sees and item they like, and hits the button to add it to their wish list. In the application, a microservice sends a query to node 1, the closest node, to add that SKU to the user’s wish list in the metadata store. The user, who lives geographically closer to node 2, then navigates to their wish list, and doesn’t see the item they just added because their query is reading from node 2, which hasn’t yet received the update from node 1.

This is a relatively minor example of the potential consequences of eventual consistency in your metadata store, but even something like this degrades the user experience and erodes user trust. Maintaining consistency between nodes in a distributed system is critical to maintaining a consistent, predictable user experience.

In the context of databases, this means that you’ll likely want a system with strong consistency guarantees. In practice, that generally means you’ll need a relational database management system.

SQL support

Speaking of relational databases, support for SQL is another important factor to consider when building a user metadata store. Aside from the consistency guarantees mentioned above, SQL has another significant advantage: almost everybody already knows it.

Building your metadata store on a SQL system will make it easier to onboard and ramp up new team members. It can also make it easier to design and optimize for system performance, given that you have roughly five decades of SQL experience and wisdom to draw upon.

There are, of course, different flavors of SQL, and it’s important to keep in mind that a distributed SQL system – which is likely what you’ll need here – will often need to be optimized differently from legacy single-instance relational databases. Even though engineers are almost certain to be familiar with SQL, it can still take time to start working with a distributed mindset if they haven’t worked with distributed SQL before.

Still, work with a familiar language is almost always going to be faster and easier to ramp than trying to get engineers to learn a new and different query language.

Change data capture (CDC)

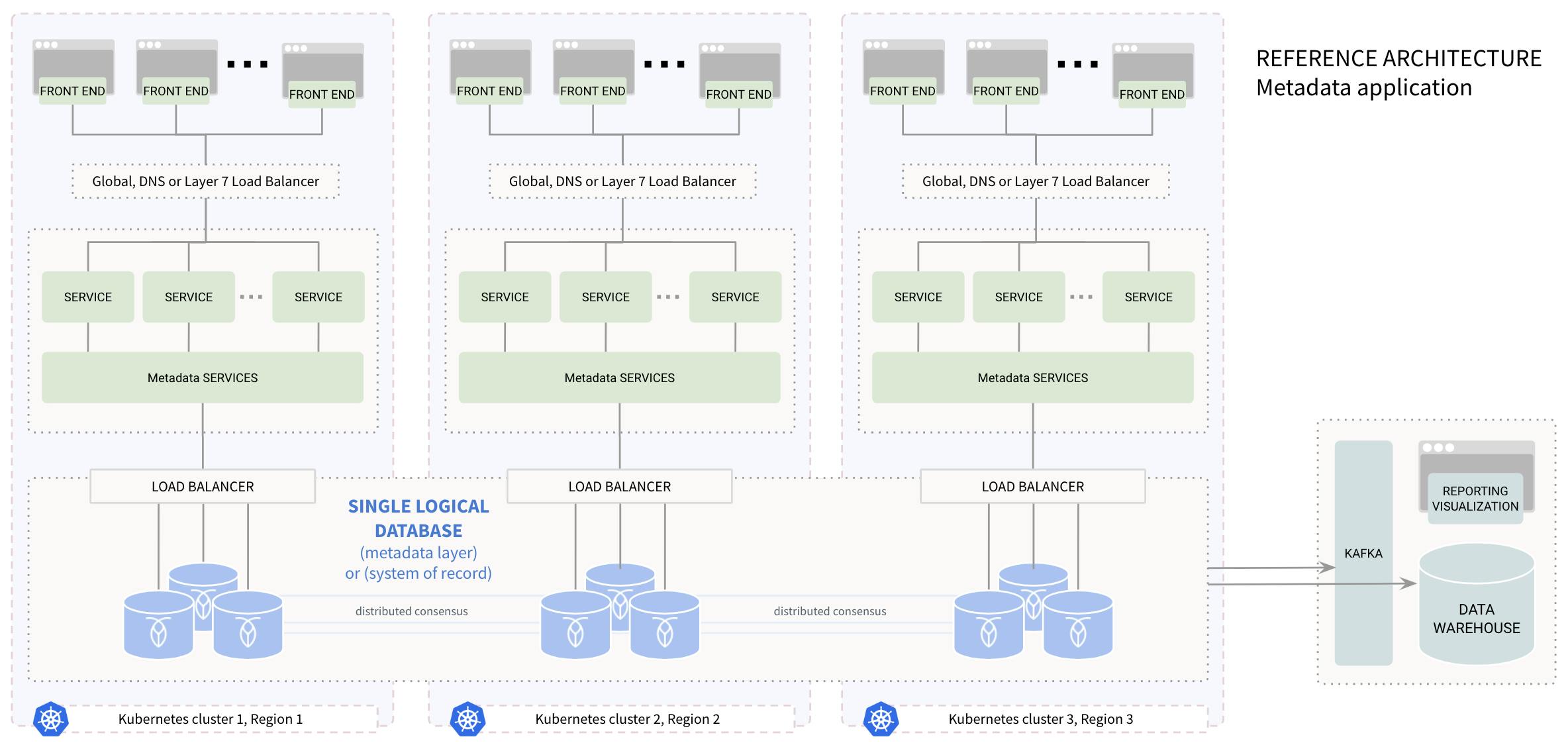

Your metadata store will typically contain a wealth of data about user preferences and interactions with your application, and this data can be valuable for everything from analytics to marketing to AI modeling. However, you don’t want these applications to be querying your production database and impacting the user experience, so you’ll need a change data capture solution that enables you to easily sync the data in your metadata store to other stores and services such as Kafka, a data warehouse, etc.

Build or buy?

Once you’ve assessed all of these needs, you’re faced with a choice: buy a solution, build something bespoke, or try some combination of the two.

All of these approaches can be valid, and you will find examples of all of them succeeding. Particularly at enterprise scale, however, buying a solution that ticks all of these boxes is highly likely to be both easier and cheaper than trying to build and maintain one in the long run. Consider, for example, the costs of manual sharding:

And that is just one of the many difficult technical problems you’ll need to solve to build a bespoke system like this.

Broadly speaking, we’ve found that many companies prefer to keep their engineering teams focused on building features that add to the business value of their product, rather than having to distract them with building and maintaining a distributed database system with all of the bells and whistles described above that make it optimal for metadata storage at enterprise scale.

In fact, you can even go a step farther here and opt for a managed service that takes most of the ops and management work off of your team’s plate as well. Choosing a purpose-built solution like CockroachDB can ensure you get all the features you need, and choosing a managed service means there’s even less chance of that dreaded midnight or weekend PagerDuty alert.

At the end of the day, building and maintaining a great product and enterprise scale is hard enough. Why worry about building and maintaining a complex system for your user metadata store when an ideal solution already exists, and you can have it managed by the same experts who built it?